I've created another benchmark. This time, I've benchmarked the different ways of synchronizing a little code using mutual exclusion on this code.

The code to protect will be very simple. It's a simple counter :

```java

//Init

int counter = 0;

//Critical section

counter++;

```

The critical section, if not protected with synchronization system, will not function properly due to possible interleavings (read the article on synchronization if you don't know what is interleaving).

I've used 3 different synchronizers to synchronize this increment :

- synchronized block

- Semaphores (fair and unfair)

- Explicit locks (fair and unfair)

I've also used a third way to solve the problem with AtomicInteger. This is not the same as the other ways because it does not provide mutual exclusion but this is a good way to synchronize simple values, like integers or boolean, but also references. The atomicity of the operations of the AtomicInteger is made using the compare-and-swap operation of the operating system. So there is no waiting operations. So there is less context switches and result in more performing code normally.

Here is the code of these 4 ways to solve the problems :

```java

private class SynchronizedRunnable implements Runnable {

private int counter = 0;

@Override

public synchronized void run() {

counter++;

}

}

private class ReentrantLockRunnable implements Runnable {

private int counter = 0;

private Lock lock;

private ReentrantLockRunnable(boolean fair) {

super();

lock = new ReentrantLock(fair);

}

@Override

public void run() {

lock.lock();

try {

counter++;

} finally {

lock.unlock();

}

}

}

private class SemaphoreRunnable implements Runnable {

private int counter = 0;

private final Semaphore semaphore;

private SemaphoreRunnable(boolean fair) {

super();

semaphore = new Semaphore(1, fair);

}

@Override

public void run() {

try {

semaphore.acquire();

} catch (InterruptedException e) {

throw new RuntimeException(e);

}

try {

counter++;

} finally {

semaphore.release();

}

}

}

private class AtomicIntegerRunnable implements Runnable {

private AtomicInteger counter = new AtomicInteger(0);

@Override

public void run() {

counter.incrementAndGet();

}

}

```

I used Runnable to facilitate the testing and timing of the different mechanisms.

The test is made in two phases :

- Test with only one thread with a sophisticated benchmark framework. This act also as warmup for the different code.

- Test with several threads (several test with increasing number of threads). The test is made using a little code I wrote for the occasion. Each method is executed 2²³ times (8388608 times exactly).

The source code is available at the end of the post.

The test has been launched on a Ubuntu 10.04 with a Java 6 virtual machine. The computer has a 64 bit Core 2 Duo 3.16 Ghz processor and 6Go of DDR2.

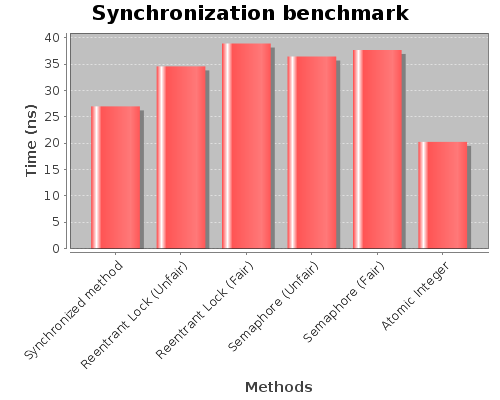

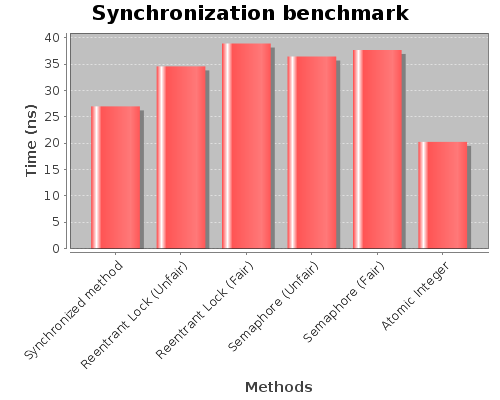

So let's see the results. First with one thread :

The first thing we see is that the AtomicInteger is the fastest version. This is because AtomicInteger do not use waiting operation, so this result in less context switches and more performances. But this is not exactly the case of the benchmark, so let's concentrate on the 5 others methods. We see that the synchronized method is the fastest and that fair methods are a little slower than unfair, but not a lot.

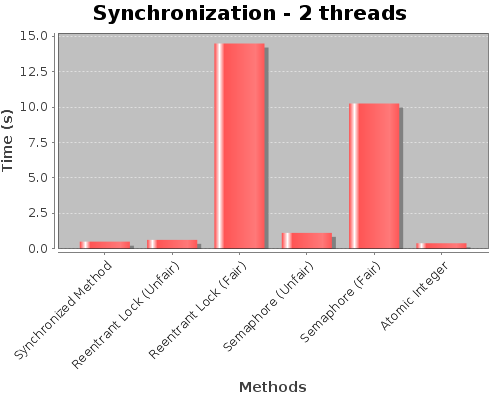

Now, we'll test the scalability of all these methods using several threads.

The first thing we see is that the AtomicInteger is the fastest version. This is because AtomicInteger do not use waiting operation, so this result in less context switches and more performances. But this is not exactly the case of the benchmark, so let's concentrate on the 5 others methods. We see that the synchronized method is the fastest and that fair methods are a little slower than unfair, but not a lot.

Now, we'll test the scalability of all these methods using several threads.

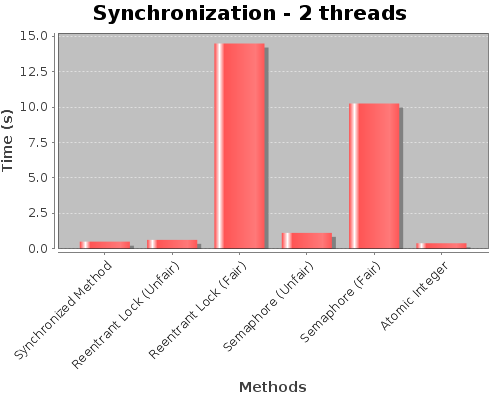

This method we can see that the fair methods are awfully slow compared to the the unfair versions. Indeed adding fairness to a synchronizer is really heavy. When fair, the threads acquire the locks in the order they ask for. With nonfair locks, barging is allowed. So when a thread try to acquire the lock and its available, it can acquire it even if there is threads waiing for the lock. It's heavier to provide fairness because there is a lot more context switches. The problem was not here with only one thread because it's always fair.

The results for the other versions are the same as with one thread.

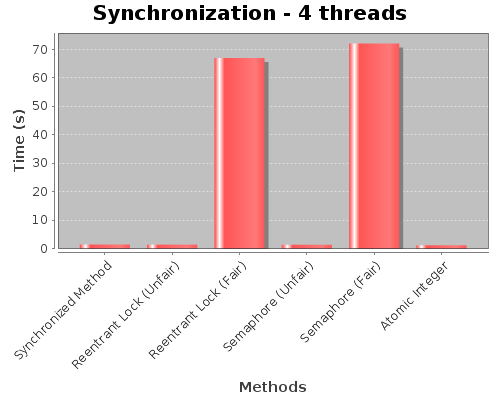

Let's add two more threads :

This method we can see that the fair methods are awfully slow compared to the the unfair versions. Indeed adding fairness to a synchronizer is really heavy. When fair, the threads acquire the locks in the order they ask for. With nonfair locks, barging is allowed. So when a thread try to acquire the lock and its available, it can acquire it even if there is threads waiing for the lock. It's heavier to provide fairness because there is a lot more context switches. The problem was not here with only one thread because it's always fair.

The results for the other versions are the same as with one thread.

Let's add two more threads :

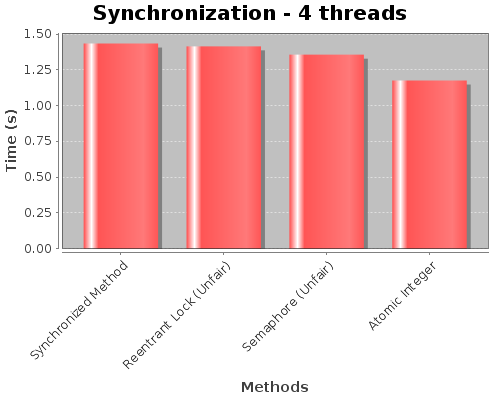

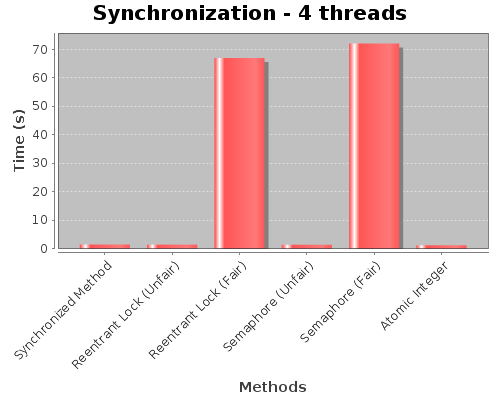

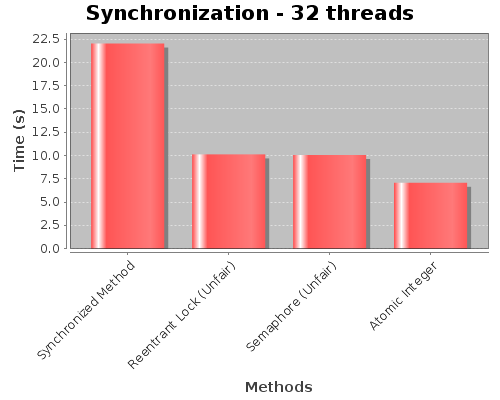

The fair versions are more and more slows when we add threads. The scalability of these methods is really bad. Let's see the graph without the fair versions :

The fair versions are more and more slows when we add threads. The scalability of these methods is really bad. Let's see the graph without the fair versions :

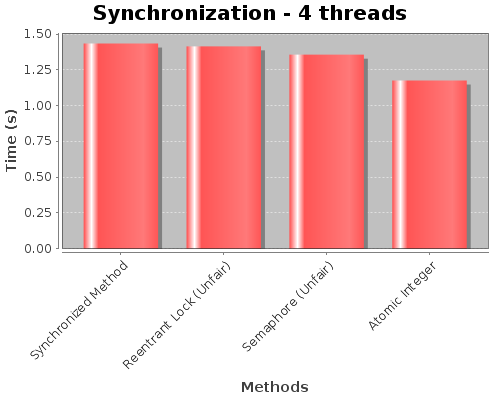

This time we can see some differences. The synchronized method is the slower this time and semaphore has a little advantage. Let's see with 8 threads :

This time we can see some differences. The synchronized method is the slower this time and semaphore has a little advantage. Let's see with 8 threads :

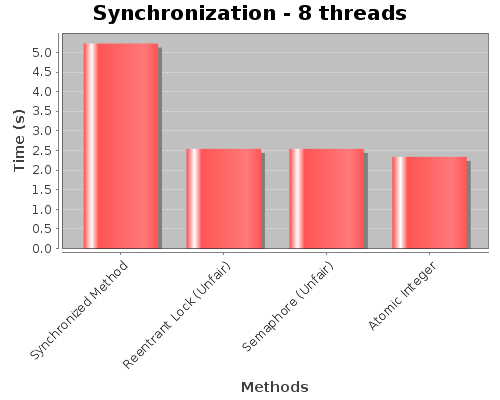

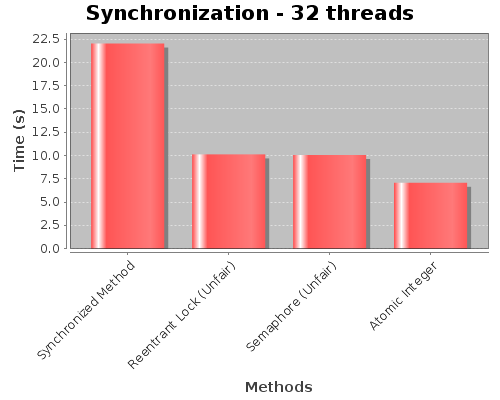

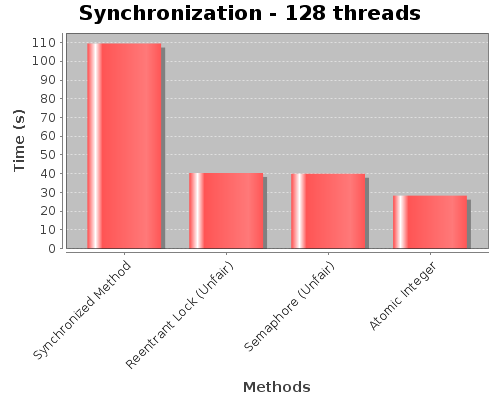

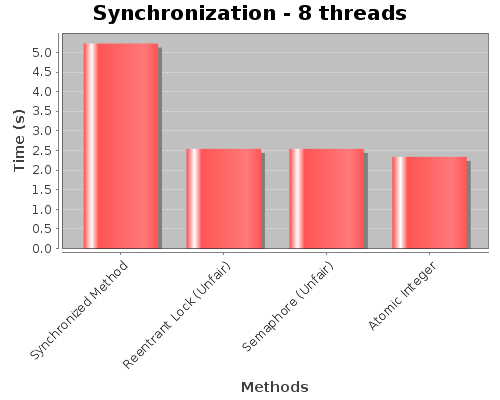

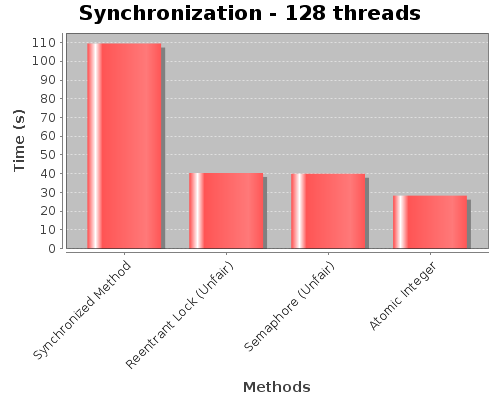

Here the synchronized method is really slower than the other methods. It appears that the algorithm of the synchronized block is less scalable than the explicit locks and semaphore versions. Let's watch what happens with other number of threads :

Here the synchronized method is really slower than the other methods. It appears that the algorithm of the synchronized block is less scalable than the explicit locks and semaphore versions. Let's watch what happens with other number of threads :

I've also made the test with other number of threads (16, 64 and 256), but the results are the same as the other.

We can made several conclusions based on the results :

I've also made the test with other number of threads (16, 64 and 256), but the results are the same as the other.

We can made several conclusions based on the results :

- Fair versions are slow. If you don't absolutely need fairness, don't use fair locks or semaphores

- Semaphores and explicit locks have the same performances. This is because the 2 classes (Semaphore and ReentrantLock) are based on the same class AbstractQueueSynchronizer that is used by almost all synchronization mechanisms of Java

- Explicit locks and semaphores are more scalable than synchronized blocks. But that depend on the virtual machine, I've seen other results indicating that the difference is a lot smaller

- The AtomicInteger is the most performing method. This class doesn't provide mutual exclusion, but provide thread safe methods to works on simple values (there is version for Long, Double, Boolean and even Reference)

So that's all for this benchmark. I hope you found it interesting.

The sources of the benchmark : Synchronization Benchmark Sources The first thing we see is that the AtomicInteger is the fastest version. This is because AtomicInteger do not use waiting operation, so this result in less context switches and more performances. But this is not exactly the case of the benchmark, so let's concentrate on the 5 others methods. We see that the synchronized method is the fastest and that fair methods are a little slower than unfair, but not a lot.

Now, we'll test the scalability of all these methods using several threads.

The first thing we see is that the AtomicInteger is the fastest version. This is because AtomicInteger do not use waiting operation, so this result in less context switches and more performances. But this is not exactly the case of the benchmark, so let's concentrate on the 5 others methods. We see that the synchronized method is the fastest and that fair methods are a little slower than unfair, but not a lot.

Now, we'll test the scalability of all these methods using several threads.

This method we can see that the fair methods are awfully slow compared to the the unfair versions. Indeed adding fairness to a synchronizer is really heavy. When fair, the threads acquire the locks in the order they ask for. With nonfair locks, barging is allowed. So when a thread try to acquire the lock and its available, it can acquire it even if there is threads waiing for the lock. It's heavier to provide fairness because there is a lot more context switches. The problem was not here with only one thread because it's always fair.

The results for the other versions are the same as with one thread.

Let's add two more threads :

This method we can see that the fair methods are awfully slow compared to the the unfair versions. Indeed adding fairness to a synchronizer is really heavy. When fair, the threads acquire the locks in the order they ask for. With nonfair locks, barging is allowed. So when a thread try to acquire the lock and its available, it can acquire it even if there is threads waiing for the lock. It's heavier to provide fairness because there is a lot more context switches. The problem was not here with only one thread because it's always fair.

The results for the other versions are the same as with one thread.

Let's add two more threads :

The fair versions are more and more slows when we add threads. The scalability of these methods is really bad. Let's see the graph without the fair versions :

The fair versions are more and more slows when we add threads. The scalability of these methods is really bad. Let's see the graph without the fair versions :

This time we can see some differences. The synchronized method is the slower this time and semaphore has a little advantage. Let's see with 8 threads :

This time we can see some differences. The synchronized method is the slower this time and semaphore has a little advantage. Let's see with 8 threads :

Here the synchronized method is really slower than the other methods. It appears that the algorithm of the synchronized block is less scalable than the explicit locks and semaphore versions. Let's watch what happens with other number of threads :

Here the synchronized method is really slower than the other methods. It appears that the algorithm of the synchronized block is less scalable than the explicit locks and semaphore versions. Let's watch what happens with other number of threads :

I've also made the test with other number of threads (16, 64 and 256), but the results are the same as the other.

We can made several conclusions based on the results :

I've also made the test with other number of threads (16, 64 and 256), but the results are the same as the other.

We can made several conclusions based on the results :