Yesterday I wondered whether the copyFile method in JTheque Utils was the best approach or whether I needed to change it, so I decided to run a benchmark.

So I searched for all the ways to copy a file in Java, even the bad ones, and found 12 methods:

- Native Copy: Make the copy using the cp executable of Linux.

- Naive Streams Copy: Open two streams, one to read, one to write, and transfer the content byte by byte.

- Naive Readers Copy: Open a reader and a writer and transfer the content character by character.

- Buffered Streams Copy: Same as Naive Streams Copy but using buffered streams instead of simple streams.

- Buffered Readers Copy: Same as Naive Readers Copy but using buffered readers instead of simple readers.

- Custom Buffer Stream Copy: Same as Naive Streams Copy, but instead of reading the file byte by byte, it uses a simple byte array as a buffer.

- Custom Buffer Reader Copy: Same as Custom Buffer Stream Copy but using a Reader instead of a stream.

- Custom Buffer Buffered Stream Copy: Same as Custom Buffer Stream Copy but using buffered streams.

- Custom Buffer Buffered Reader Copy: Same as Custom Buffer Reader Copy but using buffered readers.

- NIO Buffer Copy: Using NIO Channels and a ByteBuffer to make the transfer.

- NIO Transfer Copy: Using NIO Channels and a direct transfer from one channel to the other.

- Path (Java 7) Copy: Using the Path class of Java 7 and its method copyTo().

I think these are the principal methods to copy a file to another file. The different methods are available at the end of the post. Note that the methods with Readers only work with text files: Readers decode the bytes of the file into characters, so they don't work on a binary file like an image. Here I used a buffer size of 4096 bytes. Of course, using a larger value can improve the performance of the custom buffer strategies.
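To make the custom buffer strategy concrete, here is a minimal sketch of what a Custom Buffer Stream Copy might look like. The class and method names are hypothetical (the actual benchmark sources are linked at the end of the post); only the 4096-byte buffer size comes from the text above.

```java
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical illustration, not the code used in the benchmark.
final class CustomBufferStreamCopy {
    // 4096 bytes is the buffer size mentioned in the post.
    private static final int BUFFER_SIZE = 4096;

    static void copy(File source, File target) throws IOException {
        try (InputStream in = new FileInputStream(source);
             OutputStream out = new FileOutputStream(target)) {
            byte[] buffer = new byte[BUFFER_SIZE];
            int read;
            // Each read() call fills up to BUFFER_SIZE bytes at once.
            while ((read = in.read(buffer)) != -1) {
                // Write only the bytes that were actually read.
                out.write(buffer, 0, read);
            }
        }
    }
}
```

Changing `BUFFER_SIZE` is the only thing needed to experiment with larger buffers.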

For the benchmark, I ran the tests on files of different sizes:

- Little file (5 KB)

- Medium file (50 KB)

- Big file (5 MB)

- Fat file (50 MB)

- Enormous file (1.3 GB), binary only

I ran the tests first with text files and then with binary files, in three configurations:

- On the same hard disk. It's an IDE hard disk of 250 GB with 8 MB of cache, formatted in Ext4.

- Between two disks. I used the first disk and another SATA hard disk of 250 GB with 16 MB of cache, formatted in Ext4.

- Between two disks. I used the first disk and another SATA hard disk of 1 TB with 32 MB of cache, formatted in NTFS.

I used a benchmark framework, described here, to run the tests of all the methods. The tests were run on my personal computer (Ubuntu 10.04 64-bit, Intel Core 2 Duo 3.16 GHz, 6 GB DDR2, SATA hard disks). The Java version used is a 64-bit Java 7 virtual machine.

Because of its length, I've split the post into several pages:

- Introduction about the benchmark

- Benchmark on the same disk

- Benchmark between Ext4 and Ext4

- Benchmark between Ext4 and NTFS

- Conclusions about the benchmark results

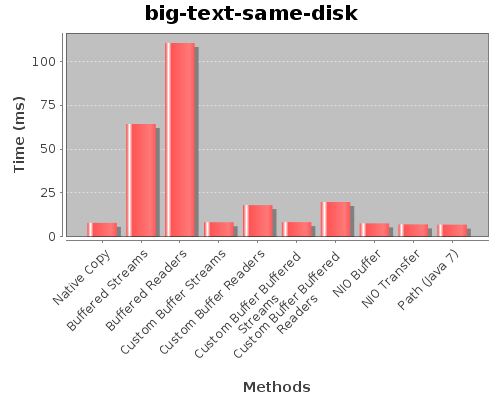

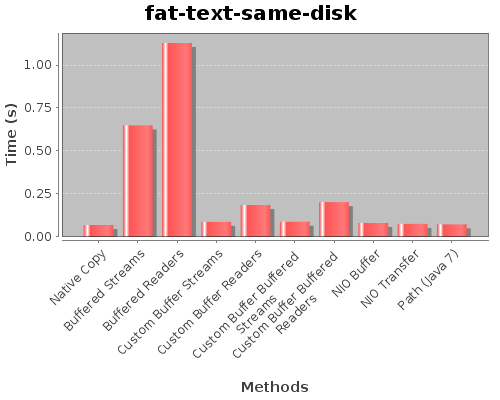

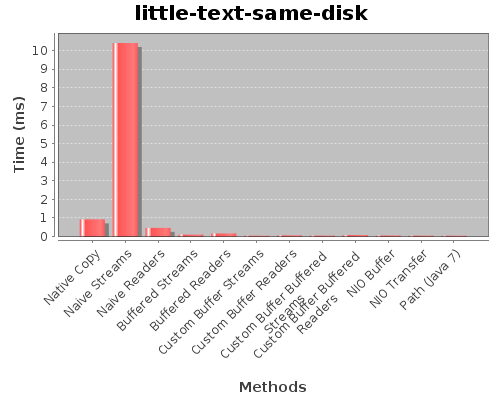

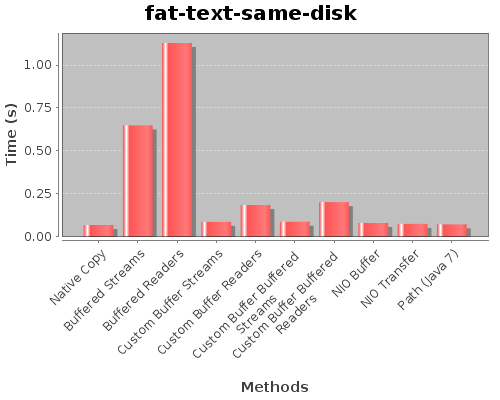

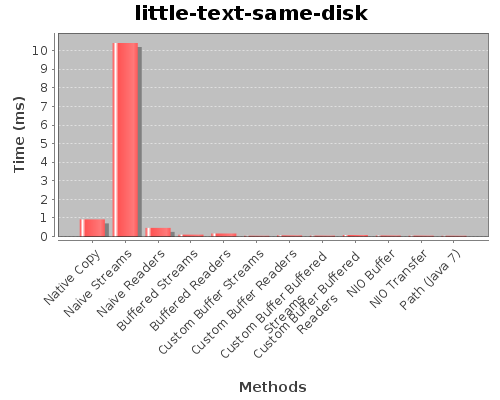

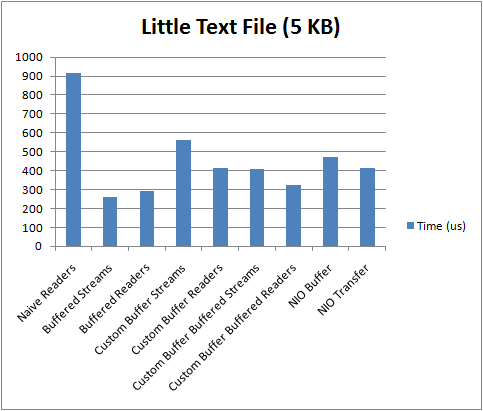

Benchmark on the same disk (Ext4)

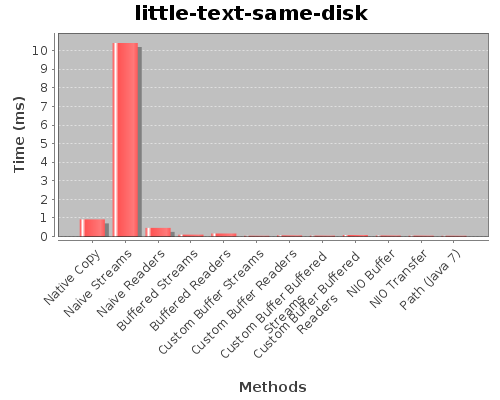

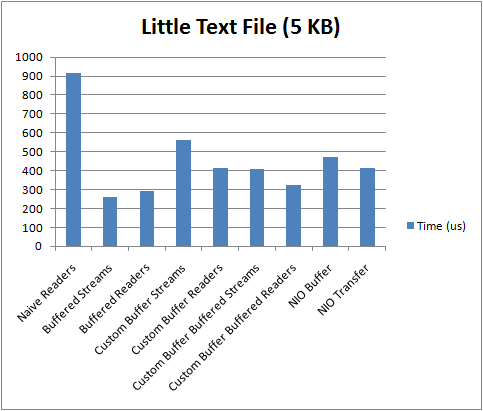

So let's start with the results of the benchmarking using the same disk.

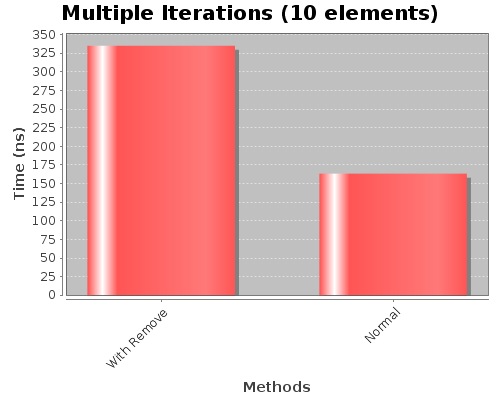

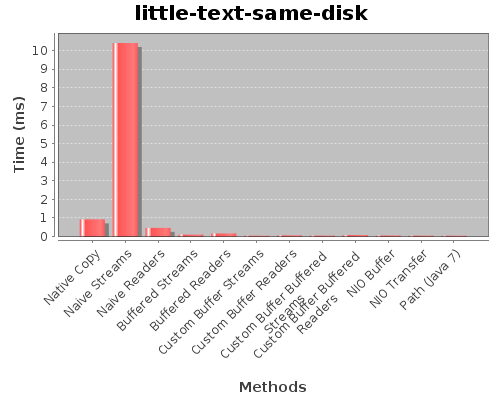

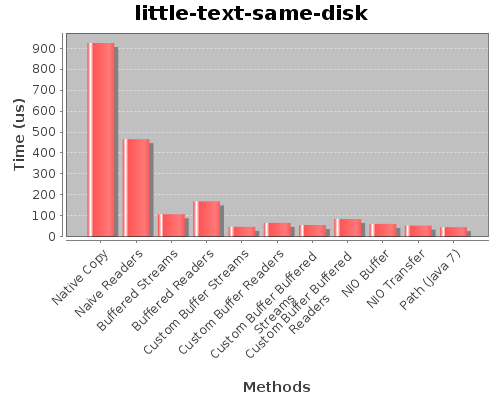

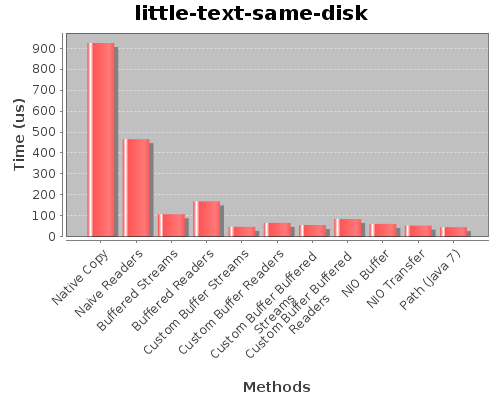

We can see that here the native and naive streams methods are a lot slower than the other methods. So let's remove the naive streams method from the graph to get a better view of the other methods:

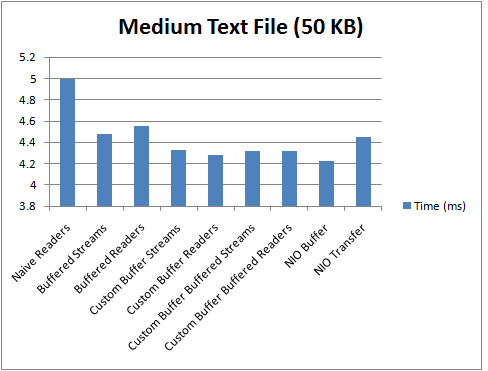

The first conclusion we can draw is that the naive readers method is a lot faster than the naive streams method. That's because a Reader uses a buffer internally, which is not the case for streams. The other methods are closer together, so we'll see what happens with the next sizes.
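As an illustration of the difference between an unbuffered and a buffered stream, the following sketch shows the same byte-by-byte loop used in both cases. The names are hypothetical; this is an assumption about what the Naive Streams and Buffered Streams methods look like, not the benchmark code itself.

```java
import java.io.BufferedInputStream;
import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical illustration, not the code used in the benchmark.
final class NaiveStreamCopy {
    // The byte-by-byte loop is identical in both variants.
    private static void transfer(InputStream in, OutputStream out) throws IOException {
        int b;
        while ((b = in.read()) != -1) {
            out.write(b);
        }
    }

    // Every read() and write() goes straight to the file stream.
    static void naiveCopy(File source, File target) throws IOException {
        try (InputStream in = new FileInputStream(source);
             OutputStream out = new FileOutputStream(target)) {
            transfer(in, out);
        }
    }

    // The buffered wrappers serve most read() and write() calls from memory.
    static void bufferedCopy(File source, File target) throws IOException {
        try (InputStream in = new BufferedInputStream(new FileInputStream(source));
             OutputStream out = new BufferedOutputStream(new FileOutputStream(target))) {
            transfer(in, out);
        }
    }
}
```

Both variants produce the same output file; only the number of calls that reach the underlying file differs.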

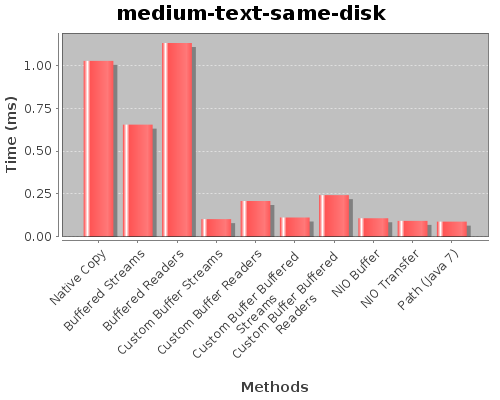

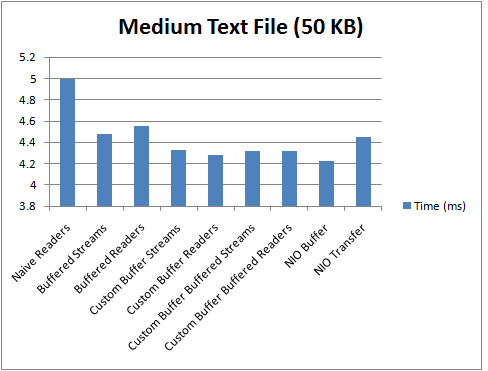

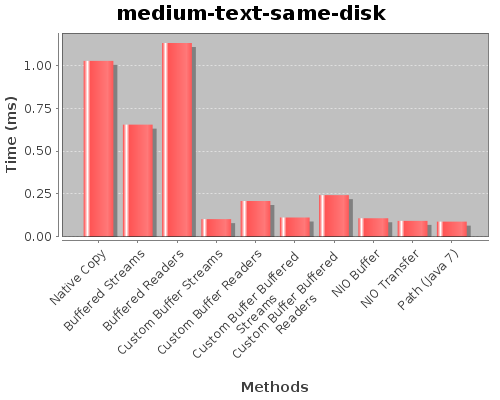

Here, we have removed the two naive methods because they are too slow compared to the others.

The readers methods are slower than the equivalent streams methods because readers work on chars: they must perform a character conversion for every char of the file, which adds a cost.
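The character conversion is also why Readers are unsafe for binary content. The sketch below copies bytes through a Reader and a Writer in memory; the class name and the choice of UTF-8 are assumptions made for the example (the benchmark itself presumably relied on the platform default charset).

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Reader;
import java.io.Writer;
import java.nio.charset.StandardCharsets;

// Hypothetical illustration of the decode/encode round trip done by Readers.
final class ReaderBinaryDemo {
    static byte[] copyThroughReader(byte[] input) throws IOException {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        try (Reader reader = new InputStreamReader(
                     new ByteArrayInputStream(input), StandardCharsets.UTF_8);
             Writer writer = new OutputStreamWriter(sink, StandardCharsets.UTF_8)) {
            char[] buffer = new char[4096];
            int read;
            // Bytes are decoded to chars on read and encoded back on write.
            while ((read = reader.read(buffer)) != -1) {
                writer.write(buffer, 0, read);
            }
        }
        // The writer is closed (and flushed) at this point.
        return sink.toByteArray();
    }
}
```

Plain ASCII text survives this round trip unchanged, whereas a byte sequence that is not valid UTF-8 (for example the single byte `0xFF`) comes out different from what went in.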

Another observation is that the custom buffer strategy is faster than the buffering of the streams, and that using a custom buffer with a buffered stream or with a simple stream doesn't change anything. The same observation can be made for the custom buffer with readers: it's the same with buffered readers or not. This is logical, because with a custom buffer we make 4096 (the size of the buffer) times fewer invocations of the read method, and because we ask for a complete buffer each time, we don't have a lot of I/O operations. So the buffer of the streams (or the readers) is not useful here.
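The reduction in read invocations can be checked with a small counting wrapper. This is only a sketch with hypothetical names, and it uses an in-memory stream, so it measures call counts, not timings.

```java
import java.io.ByteArrayInputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;

// Hypothetical helper that counts how many read calls reach the wrapped stream.
final class ReadCallCounter extends FilterInputStream {
    int calls;

    ReadCallCounter(InputStream in) {
        super(in);
    }

    @Override
    public int read() throws IOException {
        calls++;
        return super.read();
    }

    @Override
    public int read(byte[] b, int off, int len) throws IOException {
        calls++;
        return super.read(b, off, len);
    }

    // Reads everything one byte at a time and returns the number of calls.
    static int countByteByByte(byte[] data) throws IOException {
        ReadCallCounter in = new ReadCallCounter(new ByteArrayInputStream(data));
        while (in.read() != -1) { /* discard */ }
        return in.calls;
    }

    // Reads everything through a byte array and returns the number of calls.
    static int countWithBuffer(byte[] data, int bufferSize) throws IOException {
        ReadCallCounter in = new ReadCallCounter(new ByteArrayInputStream(data));
        byte[] buffer = new byte[bufferSize];
        while (in.read(buffer) != -1) { /* discard */ }
        return in.calls;
    }
}
```

For 8192 bytes of input, the byte-by-byte loop makes 8193 calls (one per byte plus the final end-of-stream call), while the 4096-byte buffer needs only 3.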

The NIO Buffer, NIO Transfer and Path strategies are almost equivalent to custom buffer.
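For reference, a NIO Buffer copy could be sketched as follows. The names are hypothetical, and the use of a 4096-byte heap buffer is an assumption (the post does not say whether the benchmark used a heap or a direct ByteBuffer).

```java
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;

// Hypothetical illustration, not the code used in the benchmark.
final class NioBufferCopy {
    static void copy(File source, File target) throws IOException {
        try (FileChannel in = new FileInputStream(source).getChannel();
             FileChannel out = new FileOutputStream(target).getChannel()) {
            // Assumption: heap buffer of the same size as the stream-based tests.
            ByteBuffer buffer = ByteBuffer.allocate(4096);
            while (in.read(buffer) != -1) {
                buffer.flip();                 // switch from filling to draining
                while (buffer.hasRemaining()) {
                    out.write(buffer);         // a write may be partial, so loop
                }
                buffer.clear();                // make the buffer ready for the next read
            }
        }
    }
}
```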

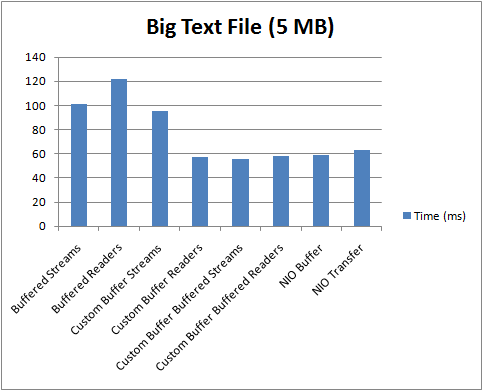

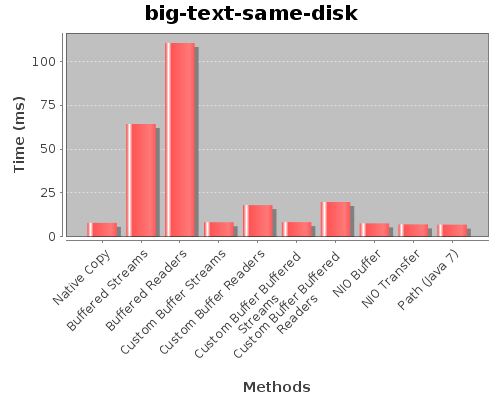

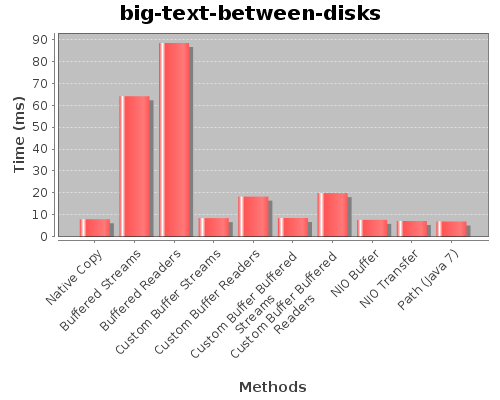

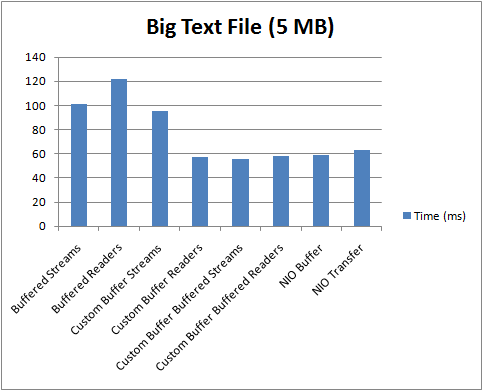

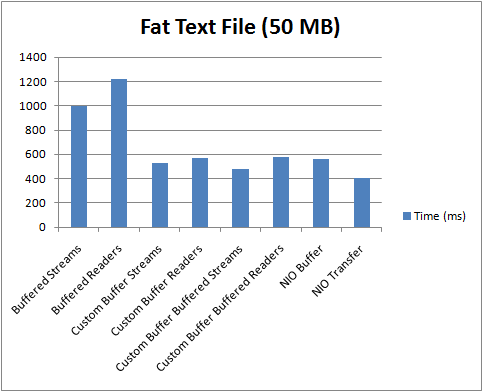

Here we see the limits of the simple buffered streams (and readers) methods. Another really interesting result is that the native method is now faster than the buffered streams and readers. The native method must start an external program, which has a non-negligible cost, but the copy performed by the cp executable is really fast, which is why the native method becomes interesting as the file size grows. All the other methods except the readers are almost equivalent.
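The cost of starting an external program is visible in how a native copy has to be written. Here is a minimal sketch, assuming a `cp` executable is available on the PATH; the class name is hypothetical and the benchmark may have launched the process differently.

```java
import java.io.File;
import java.io.IOException;

// Hypothetical illustration; assumes a Unix-like system with cp on the PATH.
final class NativeCopy {
    static void copy(File source, File target) throws IOException, InterruptedException {
        // Starting the process is the fixed overhead paid on every copy.
        Process process = new ProcessBuilder(
                "cp", source.getAbsolutePath(), target.getAbsolutePath())
                .inheritIO()
                .start();
        // Wait for cp to finish and treat a non-zero exit code as a failure.
        int exitCode = process.waitFor();
        if (exitCode != 0) {
            throw new IOException("cp exited with code " + exitCode);
        }
    }
}
```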

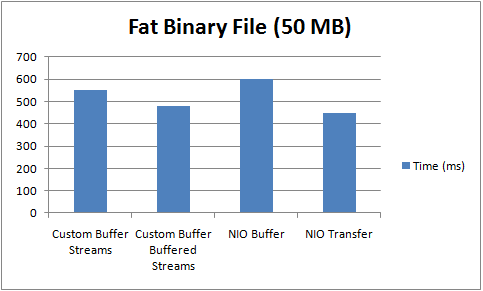

This time we can see that the native copy method is as fast as the custom buffer streams. The fastest method is the NIO Transfer method.

It's interesting to see that it takes less than 100 ms to copy a 50 MB file.
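A NIO Transfer copy hands the whole transfer to the channel instead of looping over a buffer in Java code. A minimal sketch, with hypothetical names, might look like this:

```java
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.channels.FileChannel;

// Hypothetical illustration, not the code used in the benchmark.
final class NioTransferCopy {
    static void copy(File source, File target) throws IOException {
        try (FileChannel in = new FileInputStream(source).getChannel();
             FileChannel out = new FileOutputStream(target).getChannel()) {
            long size = in.size();
            long position = 0;
            // transferTo may move fewer bytes than requested, so loop until done.
            while (position < size) {
                position += in.transferTo(position, size - position, out);
            }
        }
    }
}
```

The loop is there because `transferTo` is allowed to transfer fewer bytes than requested in a single call.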

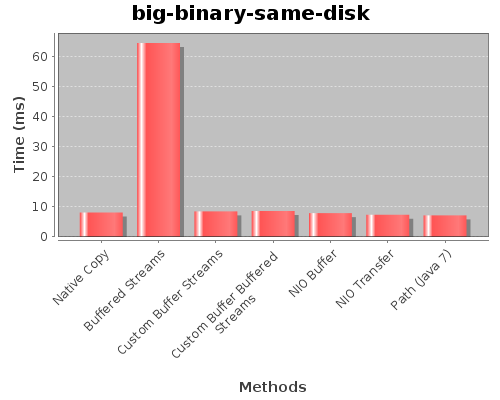

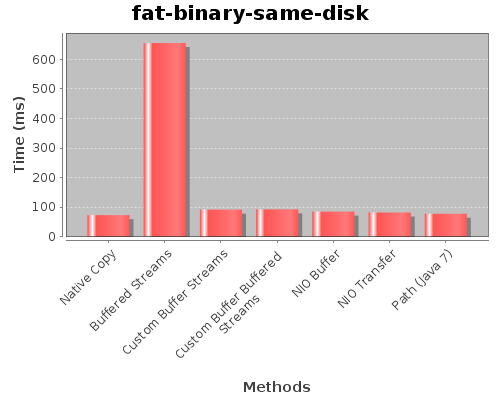

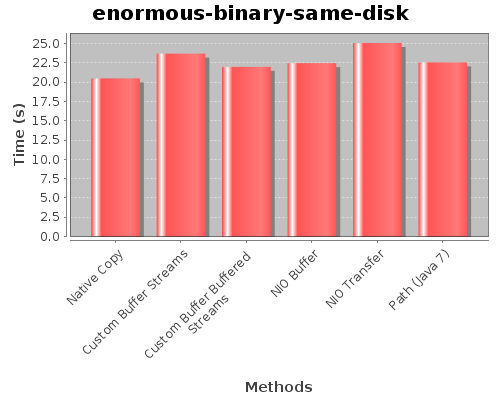

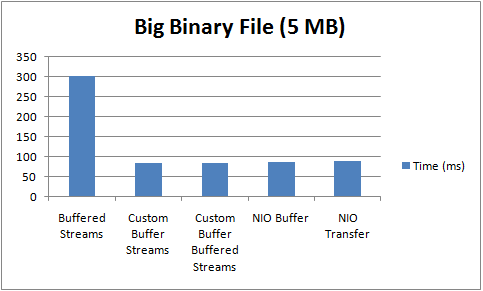

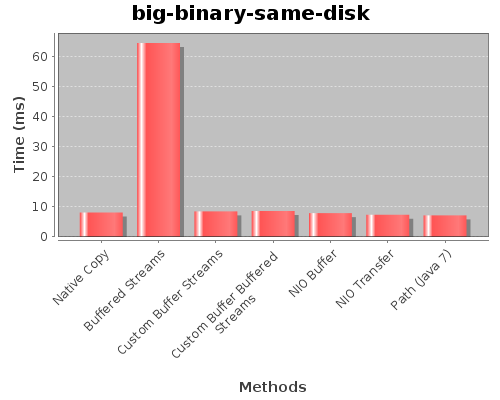

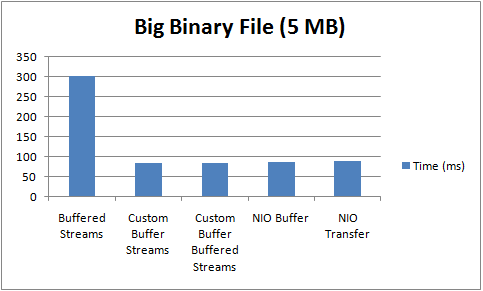

Now let's look at binary files. We'll start directly with a 5 MB file.

We see exactly the same results as with a text file. The native method starts to be interesting. We can clearly see that the NIO and Path methods are really interesting here.

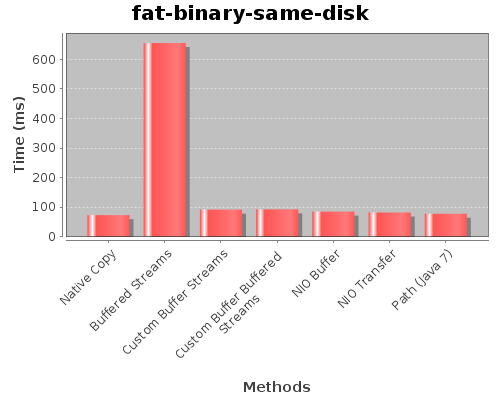

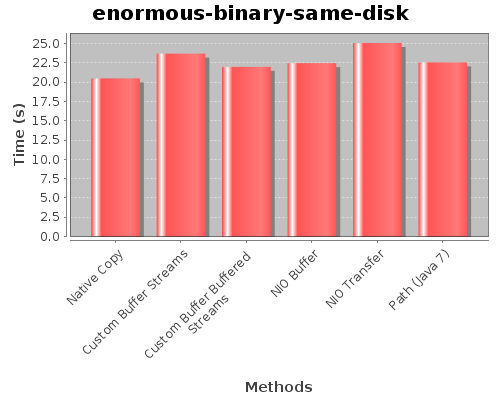

We can see that all the methods are really, really close, but the native, NIO Buffer, NIO Transfer and Path methods are the best. Just to be sure of these results, let's test with a bigger file :

Here we can see that the native method becomes the fastest one. The other methods are really close. I expected the NIO Transfer to be faster. Because of the size of the file, the benchmark was only run a small number of times, so the numbers may be inaccurate. We see that the Path method is really close to the others.

Detailed information (standard deviation, confidence intervals and other statistics) is available on the conclusion page.

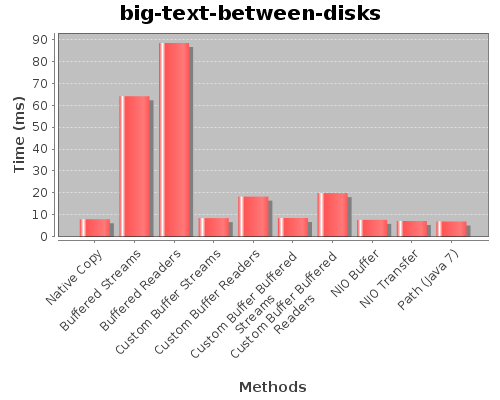

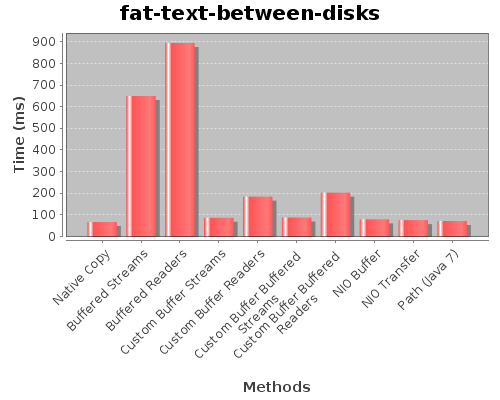

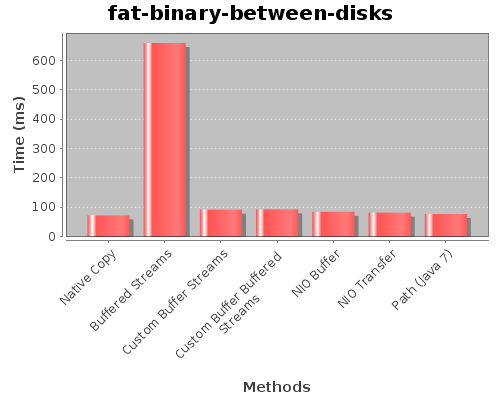

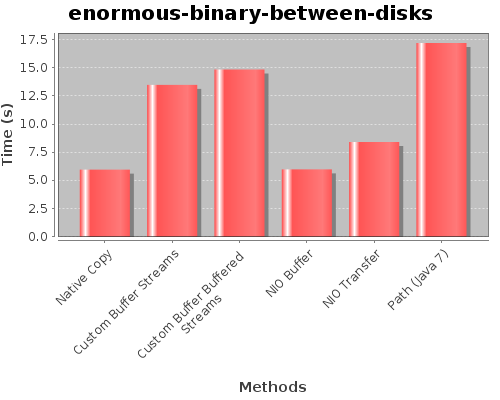

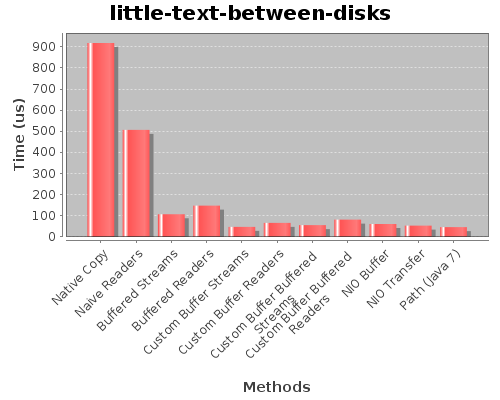

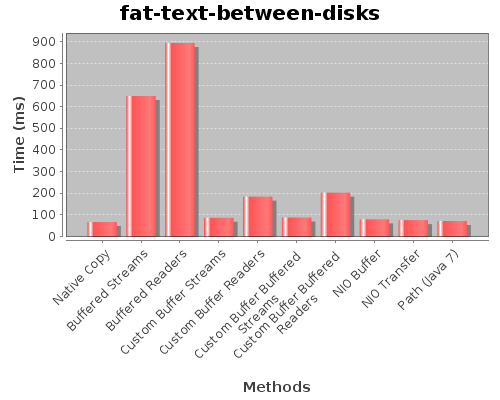

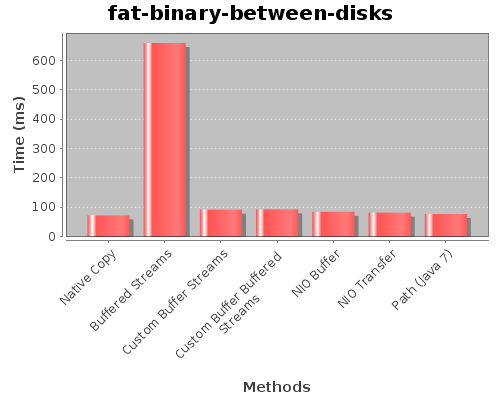

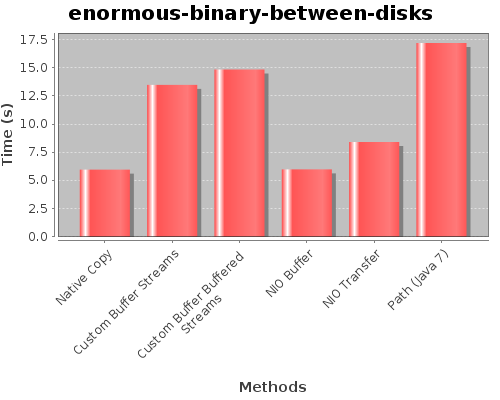

Benchmark between two disks (Ext4 -> Ext4)

Here are the results of the same tests, but using two hard disks with the same formatting (Ext4).

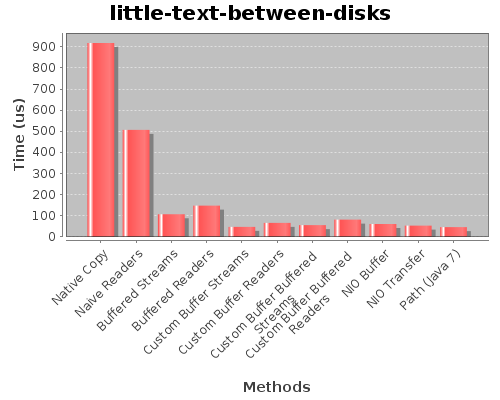

We see exactly the same results as in the first benchmark. The naive streams method is completely useless for little files. So let's remove it and see what happens for the interesting methods:

Here again, the conclusions are the same, and the times are not big enough to draw general conclusions.

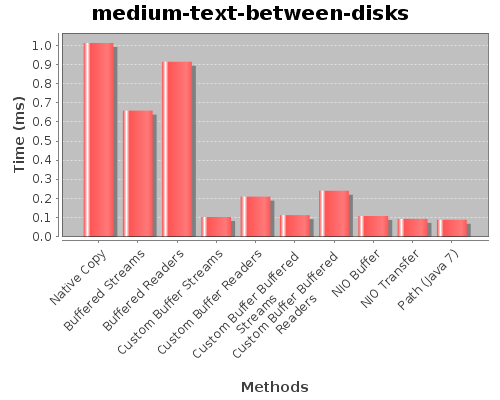

Here we reach the limits of the buffered strategy and see a real advantage for the custom buffer strategy. We also see that the NIO Transfer and Path methods take a small lead. But again, the times are really short.

The native method is back among the interesting methods.

So we have covered the text files. If we compare the times of the first benchmark (the same disk) with this one (between two disks), we can see that they are almost the same, just a little slower for some methods. So let's look at the big binary files:

Again, the results are close to using the same disk. So let's see with the last file :

This time, the differences are impressive. The native and NIO Buffer methods are the fastest methods. The NIO Transfer is a little slower but the Path method is a lot slower here.

This transfer is a lot faster than on the same disk. I'm not sure of the cause of these results. The only explanation I can find is that the operating system can do two things at the same time: reading from the first disk and writing to the second disk. If someone has a better explanation, don't hesitate to comment on the post.

Detailed information (standard deviation, confidence intervals and other statistics) is available on the conclusion page.

Benchmark between two disks (Ext4 -> NTFS)

Here are the results from the first version of this post. The first disk is the same as before, but the second disk is formatted in NTFS. For brevity, I removed some graphs. I've also removed the conclusions that are the same as in the first two benchmarks. The native method is not covered in these results.

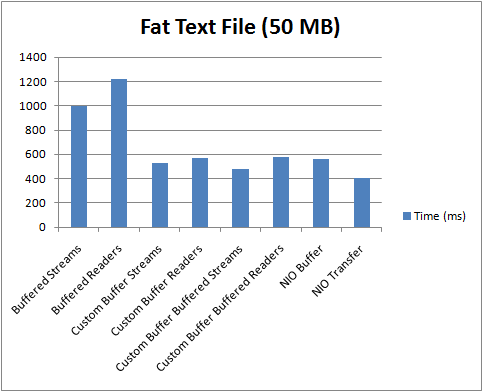

The two best versions are the Buffered Streams and Buffered Readers. This is because the buffered streams and readers can write the file in a single operation. The times here are in microseconds, so there is very little difference between the methods and the results are not really relevant.

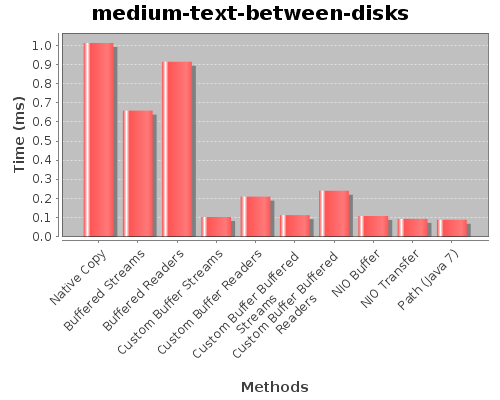

Now, let's test with a bigger file.

We can see that the versions with the Readers are a little slower than the versions with the streams. This is because Readers work on characters: for every read() operation a char conversion must be made, and the same conversion must be made on the other side.

Another observation is that the custom buffer strategy is faster than the buffering of the streams, and that using a custom buffer with a buffered stream or with a simple stream doesn't change anything. The same observation can be made for the custom buffer with readers: it's the same with buffered readers or not. This is logical, because with a custom buffer we make 4096 (the size of the buffer) times fewer invocations of the read method, and because we ask for a complete buffer each time, we don't have a lot of I/O operations. So the buffer of the streams (or the readers) is not useful here. The NIO buffer strategy is almost equivalent to the custom buffer. The direct transfer using NIO is slower here than the custom buffer methods. I think this is because, at this size, the cost of invoking native methods at the operating system level is higher than the cost of the file copy itself.

Here, it's now clear that the custom buffer strategy is better than the simple buffered streams or readers, and that using a custom buffer together with buffered streams is really useful for bigger files. The Custom Buffer Readers method is better than Custom Buffer Streams because FileReader uses a buffer internally.

And now, let's continue with a bigger file:

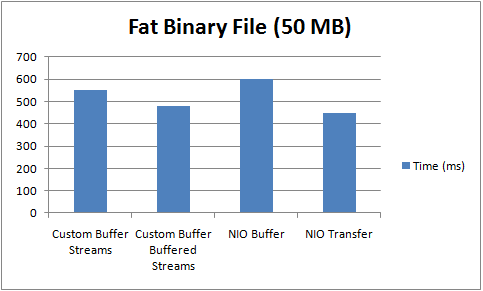

You can see that it takes less than 500 ms to copy a 50 MB file using the custom buffer strategy, and even less than 400 ms with the NIO Transfer method. Really quick, isn't it? We can see that for a big file the NIO Transfer starts to show an advantage; we'll see that better in the binary file benchmarks. We will start directly with a big file (5 MB) for this benchmark:

So we can draw the same conclusion as for the text files: of course, the buffered streams methods are not fast. The other methods are really close.

Here again, we see that the advantage of the NIO Transfer grows as the file gets bigger.

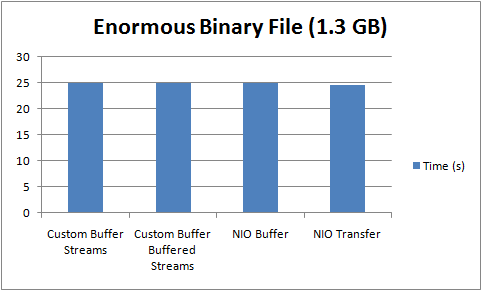

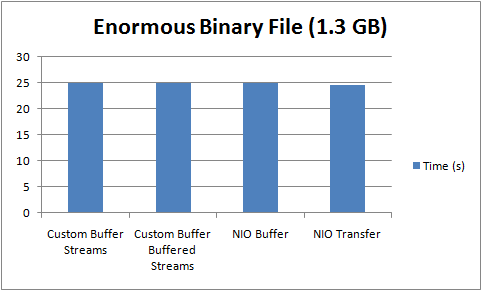

And just for fun, a huge file (1.3 GB):

We see that all the methods are really close, but the NIO Transfer method has an advantage of 500 ms. It's not negligible.

One conclusion we can draw is that transferring a file from Ext4 to Ext4 is a lot faster than from Ext4 to NTFS. I think that's logical, because the operating system must make conversions. I don't think it's because of the disk, because the NTFS disk is the fastest one I have.

Conclusion

In conclusion, the NIO Transfer method is the best one for big files, but it's not the fastest for little files (< 5 MB). The custom buffer strategy (and the NIO Buffer too) is also a really fast way to copy files. We've also seen that the method using the native utility to make the copy is as fast as NIO for big files (< 1 GB), but it's really slow for little files because of the cost of invoking an external program.

So perhaps the best method is one that uses a custom buffer strategy for little files, a NIO Transfer for big ones, and perhaps the native executable for really big ones. But it would be interesting to also run the tests on another computer and operating system.
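Such a combined method could be sketched as follows. This is only an illustration under assumptions: the names are hypothetical, the 5 MB threshold is taken from the "little files (< 5 MB)" remark above rather than from any tuning, and the native executable branch for really big files is left out.

```java
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.channels.FileChannel;

// Hypothetical illustration of a size-based strategy; not part of the benchmark.
final class HybridCopy {
    // Assumption: files below 5 MB count as "little files".
    static final long TRANSFER_THRESHOLD = 5L * 1024 * 1024;

    static boolean useTransfer(long size) {
        return size >= TRANSFER_THRESHOLD;
    }

    static void copy(File source, File target) throws IOException {
        try (FileInputStream in = new FileInputStream(source);
             FileOutputStream out = new FileOutputStream(target)) {
            if (useTransfer(source.length())) {
                // Big file: direct channel-to-channel transfer.
                FileChannel inChannel = in.getChannel();
                FileChannel outChannel = out.getChannel();
                long size = inChannel.size();
                long position = 0;
                while (position < size) {
                    position += inChannel.transferTo(position, size - position, outChannel);
                }
            } else {
                // Little file: custom 4096-byte buffer.
                byte[] buffer = new byte[4096];
                int read;
                while ((read = in.read(buffer)) != -1) {
                    out.write(buffer, 0, read);
                }
            }
        }
    }
}
```

The right threshold almost certainly depends on the machine and the file system, so it should be measured rather than copied from here.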

We can take several rules from this benchmark:

- Never copy a file byte by byte (or char by char).

- Prefer a buffer on your side over one in the stream, to make fewer invocations of the read method, but don't forget the buffer on the stream side.

- Pay attention to the size of the buffers.

- Don't use char conversion if you only need to transfer the content of a file, so don't use a Reader if you only need streams.

- Don't hesitate to use channels to make a file transfer; it's the fastest way to do it.

- Consider invoking the native executable only for really big files.

- The new Path method of Java 7 is really fast, except for the transfer of an enormous file between two disks.
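For readers who want to try the Java 7 approach: in the released Java 7 API, the copy operation is exposed as `Files.copy(Path, Path, CopyOption...)`. I believe the `copyTo()` method mentioned earlier comes from a pre-release build, so treat the mapping between the two as an assumption. A minimal sketch with a hypothetical class name:

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical illustration using the released Java 7 API.
final class PathCopy {
    static void copy(Path source, Path target) throws IOException {
        // REPLACE_EXISTING overwrites the target instead of failing if it exists.
        Files.copy(source, target, StandardCopyOption.REPLACE_EXISTING);
    }
}
```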

I hope this benchmark (and its results) interested you.

Here are the sources of the benchmark : File Copy Benchmark Version 3

Here is the complete information for the first two benchmarks : Complete results of first two benchmarks